This post is an introduction to the Kroll Artifact Parser and Extractor (KAPE) and how it can be used to collect evidence.

When malware is executed, it usually leaves traces of its execution behind. These traces are important details for investigation and forensics. KAPE is an evidence acquisition tool that can be used to collect this evidence of execution from the victim's system.

The KAPE directory has two main folders: Modules and Targets.

KAPE Modules.

The Modules directory contains several subdirectories, and inside them are the module files, which are identified by the .mkape file extension. A module defines how to process the collected evidence, using either scripts or installed applications. To view all the available modules, browse the KAPE directory or list them from the command line.

Run kape.exe --mlist . to list all the modules. The .mkape file also specifies how the processed output is formatted and stored.

The bin folder contains the executables that the modules use to process the collected evidence. When a module is selected to run, it looks for its executable in the bin folder or at the path specified in the module. For a better understanding, see Example 4 below.
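The lookup described above can be approximated with a short pre-flight check before a collection is started. This is a minimal sketch in Python and not KAPE's own logic: the function name find_module_executable is hypothetical, and the bin folder location is passed in by the caller rather than assumed.

```python
from pathlib import Path
from typing import Optional


def find_module_executable(bin_dir: Path, executable: str) -> Optional[Path]:
    """Return the location of a module's executable, or None if it is missing.

    The bin folder is checked first; after that, `executable` is treated as
    a path of its own (the "path mentioned in the module" case).
    """
    for candidate in (bin_dir / executable, Path(executable)):
        if candidate.is_file():
            return candidate
    return None
```

A call such as find_module_executable(Path("bin"), "FullEventLogView.exe") returning None would indicate that the module cannot run until the executable is copied into place.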

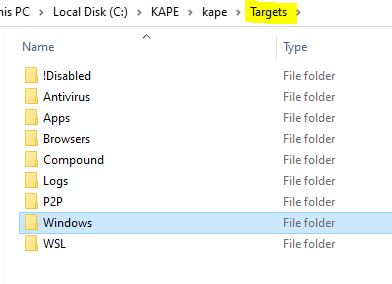

KAPE Targets.

A target defines what information needs to be collected as evidence. Targets are found in the Targets folder and have the .tkape file extension.

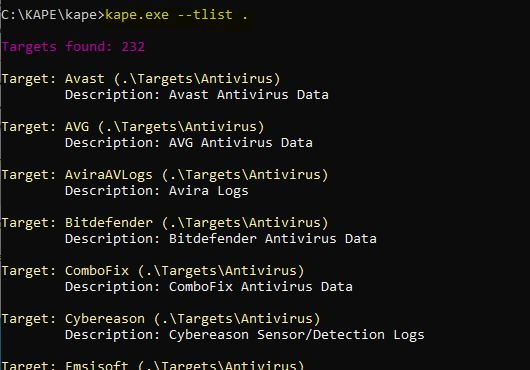

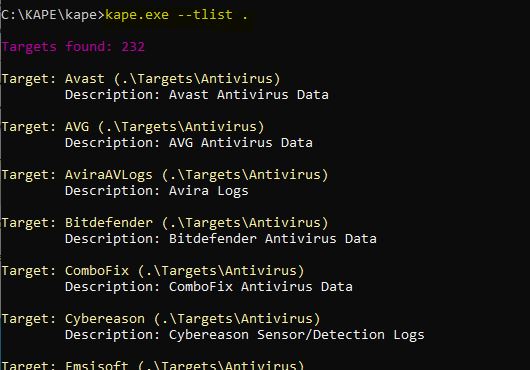

To list all the available targets, run kape.exe --tlist .
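Since targets and modules are identified purely by their file extension, the definition files can also be enumerated by walking the folders. The following is a minimal sketch in Python; the function name list_definitions is hypothetical, and it only reports file names, not whatever additional detail the KAPE listing commands print.

```python
from pathlib import Path
from typing import List


def list_definitions(root: Path, extension: str) -> List[str]:
    """Return the sorted names (without extension) of definition files under root.

    Subdirectories are searched as well, since the definitions are grouped
    into folders.
    """
    return sorted(p.stem for p in root.rglob(f"*{extension}") if p.is_file())
```

For example, list_definitions(Path("Targets"), ".tkape") would return the target names, and list_definitions(Path("Modules"), ".mkape") the module names, assuming the script is run from the KAPE directory.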

Now, let's talk about the KAPE command syntax.

Frequently used options are --tsource, --tdest, and --target. However, there are many more granular options, which you can see just by running kape.exe from the CLI.

--tsource : Target source drive to copy files from (C, D:, or F:\ for example)

--target : The target configuration to use, that is, what to collect. In the following example, I have used the target "RegistryHives". Multiple targets can be specified as a comma-separated list.

--tdest : Destination directory to copy files to, that is, where to save the collected data.
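How the three options fit together can be sketched as a small helper that assembles the argument list. This is an illustrative Python sketch only: the function name build_target_command and the E:\Evidence destination are assumptions, while the option names and the RegistryHives target come from the text above.

```python
from typing import List, Sequence


def build_target_command(tsource: str, targets: Sequence[str], tdest: str) -> List[str]:
    """Build the argument list for a target-only KAPE collection."""
    if not targets:
        raise ValueError("at least one target is required")
    return [
        "kape.exe",
        "--tsource", tsource,
        "--target", ",".join(targets),  # multiple targets are comma-separated
        "--tdest", tdest,
    ]


# Hypothetical destination path, chosen only for illustration.
args = build_target_command("C:", ["RegistryHives"], r"E:\Evidence")
print(" ".join(args))
# prints: kape.exe --tsource C: --target RegistryHives --tdest E:\Evidence
```

Keeping the arguments as a list rather than one string means the same value can be handed to subprocess.run without worrying about quoting.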

Example 1:

In this first example, I want to collect the Filesystem evidence from a victim machine.

--target is the Filesystem evidence to collect.

--tdest is the thumb drive I have attached to the victim's machine for collecting evidence.

Once the command is executed, the results will be stored in the attached thumb drive. This evidence can be used for further investigation and analysis.

Example 2:

In this second example, my objective is to collect information related to the FileSystem and EventLogs targets.

kape.exe --tsource C: --tdest Z:\Ex1\ --tflush --target FileSystem,EventLogs --vss --vhdx Ex1

--tflush : Delete all files in 'tdest' prior to collection.

--vss : Process all Volume Shadow Copies. This is useful because malware could be hidden in shadow copies.

--vhdx : Create a VHDX file. The collected evidence will be stored in a virtual volume.
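To see how the Example 2 command breaks down into the options just described, it can be split into name and value pairs. This is a rough Python sketch, not a real KAPE argument parser: the function name split_kape_options is hypothetical, and it assumes that no argument contains spaces and that any option not followed by a value is a switch.

```python
from typing import Dict, List, Union

OptionValue = Union[bool, str, List[str]]


def split_kape_options(command: str) -> Dict[str, OptionValue]:
    """Split a KAPE command line into {option name: value}.

    An option followed by another option (or by nothing) is treated as a
    switch and mapped to True. The --target value is split on commas.
    """
    tokens = command.split()[1:]  # drop the leading "kape.exe"
    options: Dict[str, OptionValue] = {}
    i = 0
    while i < len(tokens):
        name = tokens[i].lstrip("-")
        has_value = i + 1 < len(tokens) and not tokens[i + 1].startswith("--")
        if has_value:
            value = tokens[i + 1]
            options[name] = value.split(",") if name == "target" else value
            i += 2
        else:
            options[name] = True
            i += 1
    return options


example_2 = r"kape.exe --tsource C: --tdest Z:\Ex1\ --tflush --target FileSystem,EventLogs --vss --vhdx Ex1"
options = split_kape_options(example_2)
```

Here options["target"] holds the two targets as a list, options["tflush"] and options["vss"] are True because they are switches, and options["vhdx"] is the string Ex1.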

Make sure the executable is present in the bin directory. If it is not, the module will not run. In this example, the FullEventLogView_Security module uses the FullEventLogView.exe executable to process the collected evidence. This means the evidence is first collected according to the target configuration, and then the module runs.

The target destination and the module source should be the same, because the module processes the collected evidence. Here I use the target EventLogs and the module FullEventLogView_Security. Notice that the command is generated automatically based on the settings. Click Execute to run it. The command is executed and its status is shown in a terminal window. Notice how the module uses the executable to parse the evidence.

Once the operation is completed, we can see the final processed data in the specified format.
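The rule that the module source must match the target destination can be enforced by construction when a command is assembled in a script. The sketch below is in Python and is only an approximation of what the generated command might look like: the function name and both directory paths are hypothetical, and --msource, --module, and --mdest are the module-side counterparts of the target options, which the text above does not show explicitly.

```python
from typing import List


def build_collect_and_process_command(
    tsource: str, target: str, tdest: str, module: str, mdest: str
) -> List[str]:
    """Build a KAPE command that collects with a target, then runs a module.

    The module source is always taken from tdest, so the two cannot diverge.
    """
    return [
        "kape.exe",
        "--tsource", tsource,
        "--target", target,
        "--tdest", tdest,
        "--msource", tdest,  # the module reads what the target collected
        "--module", module,
        "--mdest", mdest,
    ]


# Hypothetical output folders, chosen only for illustration.
cmd = build_collect_and_process_command(
    "C:", "EventLogs", r"Z:\Ex1\tout", "FullEventLogView_Security", r"Z:\Ex1\mout"
)
```

Because the caller never supplies the module source separately, a mismatch between the two paths is not possible with this helper.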